Amazon.com Inc.’s cloud customers are clamoring to get their hands on the ChatGPT-style technology the company unveiled six weeks ago. But instead of being allowed to test it, many are being told to sit tight, prompting concerns the artificial intelligence tool isn’t fully baked.

Amazon’s announcement that it had entered the generative AI race was uncharacteristically vague, according to longtime employees and customers. Amazon Web Services product launches typically include glowing testimonials from three to five customers, these people said. This time the company cited just one: Coda, a document-editing startup.

Coda Chief Executive Officer Shishir Mehrotra said that after testing the technology he awarded Amazon an “incomplete” grade. The company’s generative AI tools are “all fairly early,” he said in an interview. “They’re building on and repackaging services that they already offered.” Mehrotra added that he expected AWS’s AI tools to be competitive long-term.

People familiar with AWS product launches wondered if Amazon released the AI tools to counter perceptions it has fallen behind cloud rivals Microsoft Corp. and Alphabet Inc.’s Google. Both companies are using generative AI — which mines vast quantities of data to generate text or images — to revamp web search and add AI capabilities to a host of products. The technology is unrefined and error-prone, but no one denies its potential to revolutionize computing.

Corey Quinn, the chief cloud economist at the Duckbill Group, a consulting firm that advises AWS clients, expressed what some were thinking about Amazon’s offering. “Feels like vaporware,” he said in an email, using the industry term for a product that’s touted to customers before it’s finished — and may not materialize at all.

Matt Wood, an AWS vice president of product, said in an interview that Amazon’s generative AI software was new, not retooled. “We’ve got the product to the point where we wanted to let customers know what we’re working on and wanted to invite some customers to take it for a spin and give us some feedback as we go,” Wood said. “The idea that this is rushed or incomplete in a way which is careless, I would push back very heavily on that. That’s not our style at all.”

AI Leader

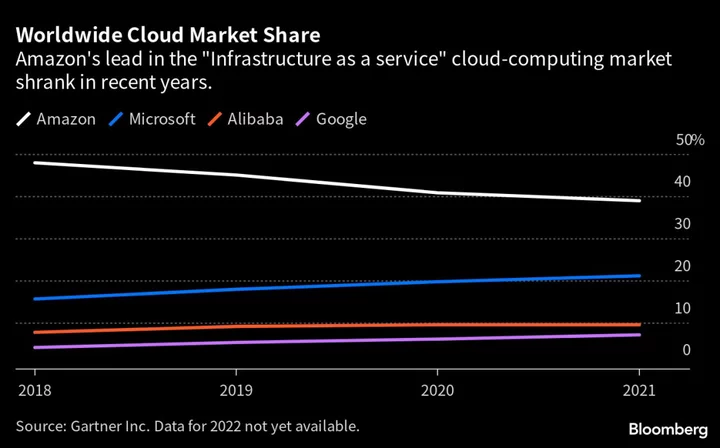

AWS currently has a commanding lead over Microsoft and Google in cloud computing. But with generative AI in their arsenals, Amazon’s chief cloud rivals could lure away its customers with a range of new services, from summarizing and generating documents to spotting and describing trends in corporate data.

Given Amazon’s deep expertise and competitive zeal, it would be premature to count the company out at this early stage. The Seattle-based giant has long been considered a leader in artificial intelligence and uses it for a wide range of critical tasks. Software selects how many products to order for sale on Amazon.com, estimates how many workers Amazon’s warehouses will need and plans routes for delivery drivers. AWS products identify faces in images or video, automatically monitor industrial equipment and extract text from medical records.


But four current and former Amazon employees said Amazon’s intense focus on serving its customers means the company sometimes has given short shrift to the pure research undertaken by OpenAI, Microsoft and Google — the kind of work that can lead to breakthroughs like ChatGPT. The company stands by its “customer-obsessed science” approach, which it says helps recruit researchers who want to tackle meaningful challenges. In recent years, Amazon has also sought to partner more with university researchers and let employees publish more academic papers.

The company’s investments in large language models, the technology behind AI-based tools, still emphasized practicality, however. One model helps surface products on the web store that a shopper might not find in a keyword search. A second set of models, disclosed in a research paper last year, helps train the Alexa voice assistant to better respond to commands in various languages. In mid-November, Amazon made the Alexa models available to AWS customers.

Two weeks later, OpenAI released ChatGPT and ignited a viral frenzy. People rushed to try out the chatbot and post their interactions on social media. Business leaders wondered how quickly the technology would be packaged into products. They began asking AWS and its partners how their capabilities compared, according to people familiar with the situation. Before that, no customers were asking for a chat interface to a large language model, said one of the people, who, like others interviewed for this story, requested anonymity to speak freely about a private matter.

The threat to Amazon Web Services became evident in January when Microsoft, already an OpenAI partner, invested an additional $10 billion in the closely held startup. Microsoft’s Azure cloud-computing service is No. 2 behind AWS. If businesses wanted the latest and greatest from OpenAI, Azure was the place to go. “Microsoft does own that corner right now,” said James Barlow, founder of Triumph Technology Solutions LLC, which helps companies use AWS.


Amazon announced its response on April 13, the same day Chief Executive Officer Andy Jassy published his annual letter to shareholders. A new AWS service called Bedrock would let companies tap into models built by Amazon partners such as Stability AI, AI21 Labs and Anthropic. The company also disclosed that it had built two of its own large language models, called Titan. One took a similar approach to the work by Amazon’s product search team, and the other is a ChatGPT-like text generator, built to create summaries of content and compose emails or blog posts, among other tasks.

In his letter, Jassy wrote that Amazon had been working on large language models for a while and said that the company believes “it will transform and improve virtually every customer experience.” He pledged to continue substantial investments in the technology.

Wood said he has spent the vast majority of his time during the last six months working with generative AI product teams and customers. “Across AWS we have teams that are jamming on new capabilities and experimenting and prototyping and ideating using this technology,” he said. “Most of the customers that I’ve spoken to have been off-the-charts excited about generative AI, our vision for it and the capabilities of Bedrock.”

But most customers haven’t had a chance to test the technology because they need to secure approval from Amazon first, a highly unusual demand, according to four people familiar with AWS product launches. Typically the cloud services arm provides broad access to its tools, even when engineers are still finalizing them, for fear of seeming to favor one customer over another or of creating the impression that the product isn’t ready, these people said.

Wood said limited previews were “pretty normal” for the company and cited three instances when products were initially released to a select group of customers.

Jim Hare, a distinguished vice president and lead Amazon analyst at Gartner Inc., said he has yet to speak to a customer who has tried the new AI tools. “It felt to me like, I don’t want to say rushed, but they felt they had to put something out as their response to what everyone was talking about with Google and Microsoft with OpenAI,” he said in an interview. He and others noted the Bedrock announcement lacked typical documentation such as technical guides and demos.

One possible explanation is that Amazon, mindful that the OpenAI and Google chatbots often get things wrong, wants to make sure its technology is sufficiently polished before pushing it to longtime customers. Company executives and researchers have also said the tools need to be secure and capable of protecting sensitive customer data. Amazon isn’t alone in keeping a tight leash on its generative AI models. Companies wanting to try out similar technology from Meta Platforms Inc. and International Business Machines Corp. also must receive permission.

Executives at two AWS customers said they’ve been promised access to the large language models by June. Wood declined to say how many customers were using Bedrock, or when new ones might be accepted. “We’re gonna onboard new customers as we go,” he said. In the meantime, Amazon is looking to capitalize on the explosion of interest in generative AI, holding forums featuring AWS salespeople. “Customers are asking about it regularly,” Barlow said.

Amazon has also announced deals with a few companies that said they’ll use the services, including Deloitte, which said it’s in the “initial stages” of testing them, as well as 3M.

Another is Royal Philips NV, the Dutch medical technology firm. Shez Partovi, the company’s chief innovation and strategy officer, said Philips aims to use Bedrock to test whether Stability AI’s image-recognition tools could help radiologists flag problematic patient scans to more quickly identify illness.

“The technology has passed a precipice,” said Partovi, a former AWS executive. “We do believe there is a bona fide value to medical imaging, as services are released like Bedrock.”

Philips hasn’t used Amazon’s Titan models, Partovi said, and a spokesperson added that the company is currently “more focused on learning than evaluating” Bedrock’s performance.

In a potentially ominous sign for Amazon, Partovi said Philips is experimenting with ChatGPT. “If there is a service out there with another cloud provider that will advance our proposition – and is not offered by our provider – we’re going to use that service,” he said.