AI voice tech company Supertone wants to "change the paradigm of the creative process," says CEO Lee Kyogu, and it's starting with K-pop.

Supertone can replicate, perfect, or generate original voices to help artists bypass the "repetitive recording and editing processes" and realize ideas that, until now, "only existed in their minds." Lee Hyun, an established Korean ballad singer, dreamed of communicating with international fans while reimagining his image. Together with Supertone, he created MIDNATT, an alter ego that sings in six languages using the power of AI.

MIDNATT released his first single, "Masquerade," in May. Over email, Lee Kyogu and MIDNATT (AKA Lee Hyun) told Mashable more about how the project came to be.

A press release stated that MIDNATT "is bolder and more honest than Lee Hyun." What is MIDNATT more honest about? In what ways does technology help you be more honest?

MIDNATT: I tried to put my own stories and feelings into the music as honestly as possible. It reflects both the ambition and fear I had of showing this new side of myself as an artist to the public. The technology was applied to the track to bring this story to life. It’s something completely new that I’ve taken on, but I wanted to take on the challenge knowing that it could expand my musical spectrum. [Through it], I was able to express my sound and message in much more diverse ways.

From what I understand from speaking to HYBE IM CEO Chung, Supertone developed a pronunciation technology specifically for this project. Can you tell me more about that process?

Lee: We call this "multilingual pronunciation correction technology." This technology corrects a person’s pronunciation so that they sound more natural and fluent, despite never having spoken a particular language before. Through this technology, anyone can speak or sing naturally in any language, overcoming language barriers and delivering the precise emotion and meaning contained in a song or speech.

For Project L, we went through the following process: First, the artist recorded the track in six different languages. Of course, his pronunciation was not perfect at this stage. Then, each native speaker narrated the same content or lyrics. Finally, by applying Supertone's technology, we were able to extract the native pronunciations and replace the linguistic content contained in the artist's recording. As a result, the audio retained the artist's timbre or voice characteristics, while the pronunciation captured the fluency of a native speaker.

You made use of a specific Supertone technology that adjusted your pronunciation in foreign languages. How were the six languages chosen?

MIDNATT: We used the voice technology with the hope of easing the language barriers that global fans might feel when listening to a foreign-language song. The six languages used on the track cover up to 8 billion people worldwide. I wanted to make music that resonates with as many people as possible.

How did you study each language to learn the details of its pronunciation? Which language was the hardest to master?

MIDNATT: Prior to this project, I had never had proper education in the five languages that are foreign to me [English, Japanese, Chinese, Spanish, and Vietnamese]. At first, I listened to a recording of native speakers’ pronunciation and imitated it. From there, I just practiced it over and over. Thankfully, I had staff around me who are fluent in various languages, so I got a lot of help from them as well. Chinese has certain pronunciations that don't exist in Korean, so recording in Chinese was especially tough. Also, English is thought of as a universal language, so I paid a lot of attention to delivering English as close to a native speaker’s pronunciation as possible.

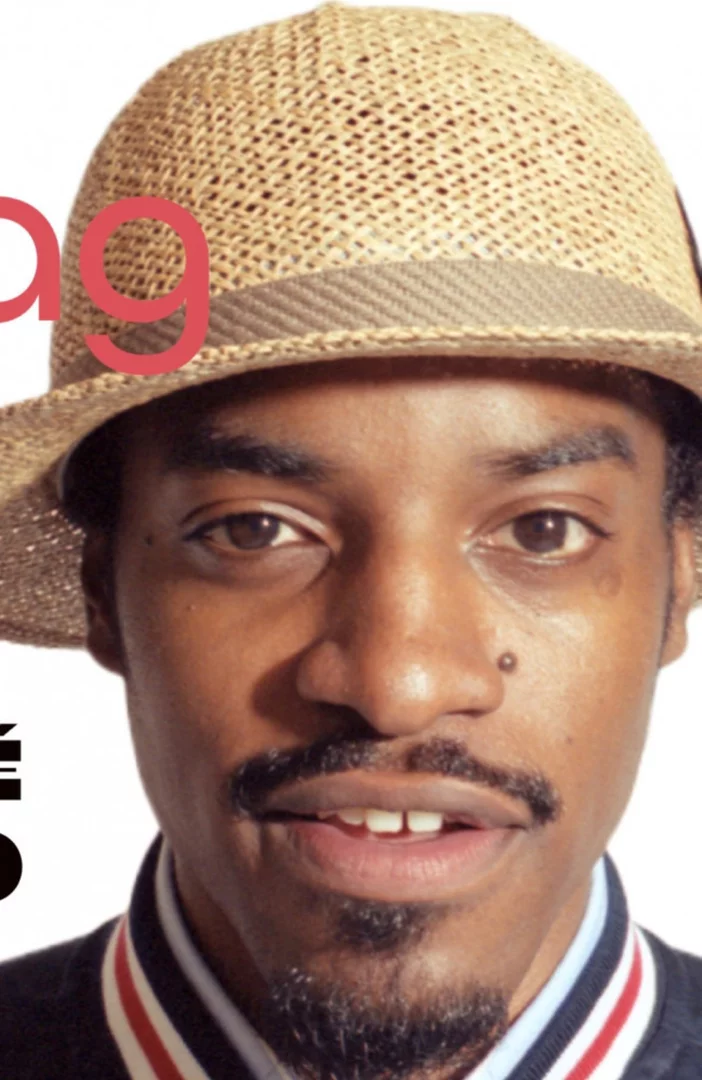

Lee Hyun brought his alter ego MIDNATT to life using AI voice tech developed by Supertone. Credit: HYBE

Lee Hyun, your voice was transformed into a woman's voice for "Masquerade." There are so many ways that voice could have sounded; it could have had a number of different timbres, a roughness or softness, etc. How did you decide what the "female" version of MIDNATT should sound like?

MIDNATT: The female voice in "Masquerade" expresses another ego of mine. It represents my past self, and because there are multiple egos that I wanted to express in the track, we figured that using a female voice, on top of my own, would deliver the message of the lyrics much better. It started with me thinking about what a female vocalist with a vocal style similar to mine would sound like. But the addition of a female voice wasn’t a decision made by me alone; [it was] born out of my collaboration with Hitchhiker.

Lee: Supertone listened to the demo version of "Masquerade" and designed a female voice using its Face2Voice® technology. Hitchhiker then used Supertone's reference and other data to help design the female version of MIDNATT's voice.

How did the idea of using Supertone's tools come up in discussions around MIDNATT? Who brought it up, and how did you learn about how it could benefit the artist before deciding to pursue it?

Lee: Hitchhiker stated that when an artist sings, they try to express and convey the meaning of the song and the message in the lyrics in their unique style. Artists strive to sing in multiple languages to connect with global fans. However, if the pronunciation is not perfect, it can break listeners' immersion and even lead them to misinterpret the song. As a result, he envisioned a technology that could correct pronunciation without damaging the original meaning of the song. Although MIDNATT understood the accompanying uncertainties, given that this was HYBE's first collaboration between technology and entertainment, he viewed it as an opportunity for new challenges and chose to participate in this project, especially as fans' expectations continued to rise.

The extended reality (XR) technology used to create the "Masquerade" music video is almost as novel as the tech used to correct your pronunciation. You've shot many music videos in your career; how was this process different? What do you see as the advantages of using this kind of technology in music video production?

MIDNATT: The music video for "Masquerade" used a completely different method of shooting, so I was in constant amazement. If I have to pick one major difference [between shooting this music video and past ones], I’d say that there are a variety of backdrops in the video, but the majority of them were shot at the same spot without having to travel to a different location. In the past, if we had a particular backdrop in mind, we had to find an actual location that looked like it. But now, with the help of technology, we can create the backdrop we want much more freely. I believe that the use of technology like this enables a greater level of creative expression.

Have you found other uses for AI in your daily life? For example, do you use ChatGPT or any generative image AI? Or maybe you're more into VR or gaming?

Lee: That's interesting. In fact, I sometimes use GPT-4 while working on documents. But I haven't had the chance to directly use any image or video-related generative AI. However, I'm indirectly using AI every day. When I listen to music or watch videos on YouTube, the content recommended to me is also based on AI algorithms. Semi-autonomous driving in cars can be another example.

MIDNATT: I don’t do much gaming, but if I have to pick, I enjoy VR games. The tech that I use the most in my day-to-day life, I would say, is probably the various apps and functions on my smartphone.

Much of the listener and media focus on MIDNATT's debut was on the AI element of the project. What are your thoughts on AI being seen as a "gimmick?" How can it be incorporated into music in a way that does not alienate listeners and disadvantage artists?

Lee: Of course, such concerns might exist. But just like many other technologies, we firmly believe that AI can be used as a fantastic tool to maximize the creativity of true creators and artists. The invention of the electric guitar fostered incredible artists like Jimi Hendrix, and Daft Punk used synthesizers in their own creative ways to bring innovation to electronic music. Also, judging from fan reactions after the unveiling of Project L, we believe it demonstrates the positive value of art that integrates AI technology.

MIDNATT: I had a clear direction of what I'd like MIDNATT’s debut project to be. I went into the project thinking even if we showcase the latest technology and a trendy sound, it should not take away anything from my unique story and authenticity as an artist. We worked on the track and applied the technology in a way that still maintains the uniqueness of my voice and gives it a diverse means of expression. I’m very grateful that my fans understood the intention and sincerity behind it.